Full GitHub Source

Concept

A framework for maintaining client side business object cache consistency with database update “side effects”.

Prerequisites

- Stored procedures = business rules

Yep, I said it… My data layer is basically a homegrown spin on ADO.Net Typed DataSets, i.e. “Business” class wrappers around ADO.Net DataTables. I like to keep the majority of my business rules in stored procedures (“procs”). I’ve experienced sustained, maintainable code velocity on real-world LOB projects by evolving a relational model and the views & stored procedures on top of it. As awareness of business entity relationship requirements grows, it’s often possible to meet those requirements entirely in the proc queries, with no changes necessary in higher layers. Being able to refactor how a particular list is populated WITHOUT REQUIRING A BINARY RELEASE can be very powerful.

I realize this may all seem controversial to an OO mindset, but it’s served me well over multiple database-oriented projects. If your project is not inherently table oriented, please stop right here. This is very much a relationally oriented design approach. If one is fortunate enough to have the freedom to design the database as part of the overall solution scope, and stored procedures are therefore fair game, then not taking advantage of procs as “business methods” is throwing away a huge asset. If one is not that lucky, and I realize big corporate projects tend not to be, then I completely understand taking great OO measures to insulate one’s beautiful architecture from the messy legacy database structure. EntityFramework welcomes you :) Otherwise, I feel that remaining near and dear to one’s mother database is a very fruitful relationship. Procs are easily maintainable and deployable: no binaries, very scriptable, very DevOps’y.

- Naturally, accepting dependence on a database for business rules does imply that our application must be generally connected to a database. One could argue this doesn’t fly for disconnected client scenarios, e.g. mobile devices. However, it’s not far-fetched to have a local database provide this support and then sync to the big mother database (cloud, etc.) when connectivity is restored. One could still leverage the readily deployable nature of stored procs to provide the right business smarts to the local DB. Indeed, that makes for a tiered relational-centric model, as opposed to the typical tiered OO-centric architectures which relegate relational technology to the last tier only :)

- MS SQL Server 2005+: This post uses the SQL Server 2005+ “OUTPUT” clause. I’d be interested to know whether other DBs support something similar, but it’s more of a convenience and possibly a mild performance benefit than a critical requirement.

Business Example

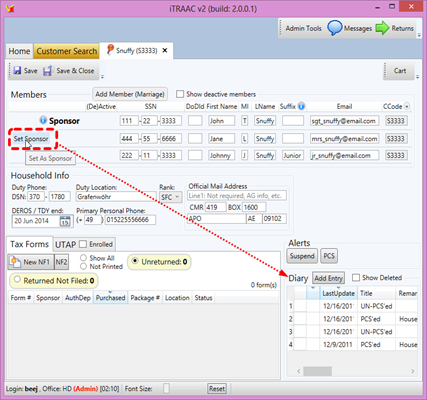

To frame a case which demonstrates the need for typical business requirements driven side effects, take a look at the adjacent screenshot.

In this scenario there is a household with some people in it (aka members or clients). In this business domain only one person can be the sponsor of a household at any given time. Likewise, only one person can be flagged as the spouse, namely the partner who is not the sponsor. These designations are maintained as flags on the Clients database table. In this example, we’re exploring what needs to happen when the sponsor changes from one person to another. This can happen when the existing sponsor leaves the business system which grants this privilege while the spouse remains in the system; the spouse can then assume the sponsorship privilege and nothing else needs to change.

So, in the pictured UI, the current sponsor is Sgt. John Snuffy. To effect this change, the user would select the “Set Sponsor” button on the spouse entry (Mrs. Jane Snuffy). As is typical in a tiered design, this button fires a Business Object method: SetSponsor(…)

By design, my Business Class methods tend to be fairly light wrappers around proc calls. For example:

    public void SetSponsor(string NewSponsorClientGUID, bool FixExistingPackageLinks)
    {
      using (iTRAACProc Sponsor_SetSponsor = new iTRAACProc("Sponsor_SetSponsor"))
      {
        Sponsor_SetSponsor["@SponsorGUID"] = GUID;
        Sponsor_SetSponsor["@NewSponsorClientGUID"] = NewSponsorClientGUID;
        Sponsor_SetSponsor["@FixExistingPackageLinks"] = FixExistingPackageLinks;
        TableCache(Sponsor_SetSponsor);
        HouseMembers = HouseMembers; //for some reason OnPropertyChanged("HouseMembers") didn't refresh the Members Grid, i don't have a good guess but this little hack worked immediately so i'm moving on
      }
    }

Line #8 above is the huckleberry. The TableCache method is implemented in the BusinessBase class… it fires the sproc and then goes into the DataSet.Merge() logic explained below…

While we’re looking at this code, let me quickly divert to explain the “Proc” class. Nutshell: Proc is a convenient wrapper around ADO.Net SqlCommand. Among other things it does the SqlCommandBuilder.DeriveParameters() + caching thing that you’ll find in many similar wrappers (e.g. Microsoft’s Data Access Application Block; I just didn’t fall in love with their API and wanted my own spin). DeriveParameters() removes the dreary burden of all that boring proc parm definition boilerplate prior to each proc call (add param by name, set the datatype, etc.) and instead pulls it from the database metadata, which already knows all that information anyway. Brilliant. Therefore we get right to the point of assigning values to named proc parms and firing the query. SqlClientHelpers.cs contains the Proc class as well as all kinds of data helper methods that have evolved over several projects. I wouldn’t want to start a database project without it at this point.

iTRAAC is the name of the project I pulled this example from. iTRAACProc is a very light subclass that assigns a few common domain specific parms (e.g. UserID) before handing off to the base Proc class. Conveniently, the Proc class’ parm[“@name”] indexer ignores anything that’s not declared on the specified proc, so only procs that actually require these parms will receive them.
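To illustrate the "indexer ignores undeclared parms" behavior, here is a minimal sketch. `ProcSketch` and `ProcSketchDemo` are hypothetical names, not the actual Proc class, and the parameters are added by hand as a stand-in for SqlCommandBuilder.DeriveParameters(), which needs an open connection. It assumes the classic System.Data.SqlClient provider.

```csharp
using System;
using System.Data;
using System.Data.SqlClient;

// Hypothetical stand-in for the post's Proc class; not the author's actual code.
public sealed class ProcSketch : IDisposable
{
    private readonly SqlCommand _cmd;

    // Assumes the command's Parameters collection has already been populated.
    public ProcSketch(SqlCommand commandWithParameters)
    {
        _cmd = commandWithParameters;
    }

    // Assigning a parm the proc does not declare is silently ignored.
    public object this[string name]
    {
        get { return _cmd.Parameters.Contains(name) ? _cmd.Parameters[name].Value : null; }
        set { if (_cmd.Parameters.Contains(name)) _cmd.Parameters[name].Value = value ?? DBNull.Value; }
    }

    public void Dispose() { _cmd.Dispose(); }
}

public static class ProcSketchDemo
{
    public static ProcSketch Build()
    {
        // Hand-added parameters simulate what DeriveParameters() would discover.
        var cmd = new SqlCommand("Sponsor_SetSponsor");
        cmd.CommandType = CommandType.StoredProcedure;
        cmd.Parameters.Add("@SponsorGUID", SqlDbType.UniqueIdentifier);
        cmd.Parameters.Add("@FixExistingPackageLinks", SqlDbType.Bit);
        return new ProcSketch(cmd);
    }

    public static void Main()
    {
        using (ProcSketch proc = Build())
        {
            proc["@SponsorGUID"] = Guid.NewGuid();
            proc["@FixExistingPackageLinks"] = true;
            proc["@UserID"] = 42; // not declared on this proc, so nothing is stored
            Console.WriteLine(proc["@UserID"] == null);
        }
    }
}
```

With this shape, a subclass can blindly assign common domain parms before every call, and only the procs that declare them will receive them.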

Ok so back to our scenario… Besides setting the flag on Jane’s record to indicate she is now the sponsor, we also need to remove the sponsorship flag from John as well as flip the spouse flag from Jane to John (other queries and reports depend on having those flags consistent)… and oh, by the way, we also want to log all of this to the audit table so there’s a historical reference of what changes brought us to the current state of a household. We want to drive all of this from the database proc logic, and once the database has changed we want the UI to magically update to reflect all these changes and additions (including the new audit record, aka “Diary” in the UI). So this is where we’ve arrived at what I call side effects (maybe there’s a better term?). That is: in response to a relatively innocent-looking user action, our desired business rules will drive various values to be changed and entirely new rows to be added that are not directly maintained by the user. This is not simple CRUD table maintenance; these are real business rules with all the crazy interconnections that must be supported :)

Update-proc example (full source):

    SET @TableNames = 'Client'
    UPDATE iTRAAC.dbo.tblClients
    SET StatusFlags = CASE WHEN RowGUID = @NewSponsorClientGUID THEN StatusFlags | POWER(2,0)
                      ELSE StatusFlags & ~POWER(2,0) END
    OUTPUT INSERTED.RowGUID, CONVERT(BIT, INSERTED.StatusFlags & POWER(2,0)) AS IsSponsor
    WHERE SponsorGUID = @SponsorGUID
    AND RowGUID IN (@OldSponsorClientGUID, @NewSponsorClientGUID)

Line #1 is pertinent. By convention, all procs which need to participate in the approach I’m proposing in this post must have a @TableNames OUTPUT parameter. This is a CSV list of table names corresponding to each result set returned from the proc (in sequential order). This way, the proc generically informs the data layer what must be merged into the client data cache (repository).
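To make the @TableNames convention concrete, here is a minimal sketch of how a data layer might pair the CSV list with the result sets it gets back. `TableNamesSketch` and `ApplyTableNames` are hypothetical names, not the actual TableCache code, and the sketch assumes every name in the list is distinct (a DataSet won't hold two tables with the same name).

```csharp
using System;
using System.Data;

public static class TableNamesSketch
{
    // Pairs each result set (in the order returned) with a name from the CSV list.
    public static void ApplyTableNames(DataSet results, string tableNamesCsv)
    {
        string[] names = tableNamesCsv.Split(new[] { ',' }, StringSplitOptions.RemoveEmptyEntries);
        if (names.Length != results.Tables.Count)
        {
            throw new InvalidOperationException(
                "@TableNames lists " + names.Length + " names but the proc returned "
                + results.Tables.Count + " result sets.");
        }
        for (int i = 0; i < names.Length; i++)
        {
            results.Tables[i].TableName = names[i].Trim();
        }
    }

    public static void Main()
    {
        // Simulates the default names a data adapter fill would assign.
        var results = new DataSet();
        results.Tables.Add("Table");
        results.Tables.Add("Table1");

        ApplyTableNames(results, "Client, Diary");

        foreach (DataTable table in results.Tables)
        {
            Console.WriteLine(table.TableName);
        }
    }
}
```

Once the incoming tables carry real entity names, the merge step can locate the matching cached table by name alone.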

UPDATE-OUTPUT syntax kungfu =)

Line #5 above is cool: rather than re-SELECTing the modified data, OUTPUT lets us leverage the fact that UPDATE already knows which rows it hit. I dig it. Back on the client side, the data layer takes that PARTIAL (i.e. very column-specific) result set and merges it back into the cache like so (full source):

    //nugget: DataSet.Merge(DataTable) has become a real linchpin in the whole data roundtrip approach
    //nugget: in a nutshell, update procs return a bare minimum of updated fields in a return resultset along with a corresponding CSV list of @TableNames
    DataTable cachedTable = dsCache.Tables[tableName];
    dsCache.Merge(incomingTable, false, (cachedTable == null) ? MissingSchemaAction.AddWithKey : MissingSchemaAction.Ignore); //PreserveChanges pretty much has to be false in order to count on what comes back getting slammed in
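Here is a self-contained, in-memory sketch of that partial merge, with no database involved. The table and column names mirror the example above, but `PartialMergeSketch` and its methods are hypothetical, and the incoming table is built by hand as a stand-in for what the proc's OUTPUT clause would return.

```csharp
using System;
using System.Data;

public static class PartialMergeSketch
{
    // Same call shape as the snippet above: add the table on first sight, otherwise merge into it.
    public static void MergeIntoCache(DataSet cache, DataTable incoming)
    {
        bool isNew = !cache.Tables.Contains(incoming.TableName);
        cache.Merge(incoming, false, isNew ? MissingSchemaAction.AddWithKey : MissingSchemaAction.Ignore);
    }

    public static DataSet Demo(Guid john, Guid jane)
    {
        // Cached entity table, keyed on RowGUID so incoming rows can be matched to it.
        var cache = new DataSet();
        DataTable clients = cache.Tables.Add("Client");
        clients.Columns.Add("RowGUID", typeof(Guid));
        clients.Columns.Add("Name", typeof(string));
        clients.Columns.Add("IsSponsor", typeof(bool));
        clients.PrimaryKey = new[] { clients.Columns["RowGUID"] };
        clients.Rows.Add(john, "John Snuffy", true);
        clients.Rows.Add(jane, "Jane Snuffy", false);
        cache.AcceptChanges();

        // Partial result set: only the key and the one column the proc changed.
        var incoming = new DataTable("Client");
        incoming.Columns.Add("RowGUID", typeof(Guid));
        incoming.Columns.Add("IsSponsor", typeof(bool));
        incoming.Rows.Add(john, false);
        incoming.Rows.Add(jane, true);
        incoming.AcceptChanges();

        MergeIntoCache(cache, incoming);
        return cache;
    }

    public static void Main()
    {
        DataSet cache = Demo(Guid.NewGuid(), Guid.NewGuid());
        foreach (DataRow row in cache.Tables["Client"].Rows)
        {
            Console.WriteLine("{0}: IsSponsor={1}", row["Name"], row["IsSponsor"]);
        }
    }
}
```

The point of the sketch is that the Name column, which the incoming rows never mention, should be left alone while IsSponsor is overwritten on the matching rows.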

The Big Picture

What this approach tees up is that your procs can drive an unlimited amount of side effects which can be granularly returned to the client side cache.

Since you can pick and choose exactly which columns are returned (via standard SELECTs or the OUTPUT clause), you can fine-tune exactly which fields are allowed to come back in the side effects and blast into the client cache vs. which fields may have pending uncommitted user edits in the cache. That’s pretty cool.

View->ViewModel (MVVM) environments with robust declarative databinding, like WPF, really shine when you see all of these side effects immediately manifest on the UI just by bringing the data back into the BusinessObject(DataSet) cache (that the UI is bound to). The procs are very much in control of the business logic and ultimately what’s displayed, yet without being coupled to the UI. Great stuff.

Additional perks in the code provided:

- An interesting “union-like” benefit in the data layer: I ran into requirements where the most appealing clean design was to modularize subroutine procs that would be called from other procs. Fair enough so far. On top of that, I found a need to return these field-level data changes (aka side effects) for the same entity table from multiple procs in the subroutine chain, e.g. Client -> Proc1 -> SubProc2 & SubProc3. Burdening the T-SQL proc layer with capturing the multiple proc results and union’ing them together is ugly design. It wound up being very clean and convenient to defer the union of these multiple selects to the TableCache C# data layer logic. The “union” effect is readily implemented by looping through the tables of the same name and using ADO.Net’s “DataTable.Merge()” to apply each incoming rowset to the existing entities in the repository cache. Including matching primary keys in the incoming rowsets is what makes them update cached entities rather than insert new ones.

- Handy initial client-side rows: I should say, this next bit is a technique that’s struck me as convenient, yet it’s not specifically dependent on the TableCache approach… these building blocks do, however, all play into each other to nicely address what I’ll call the “new row dilemma”. That is, one typically needs some blank rows to databind to when creating a new record in the UI, but it’s often undesirable to physically manifest these rows in the database until you’re sure they’re really going to be committed. It really stinks to sacrifice data integrity constraints just to allow for initial empty rows. A typical solution is to DataTable.Rows.Add() on the client and leave the DB out of it until you commit fully validated rows, but now client code is responsible for initializing new rows. I hate that for a couple of reasons. First, I want that logic in the procs, where I can evolve it at will at the database tier w/o needing to deploy a new client binary. Second, for client logic consistency, it’s much cleaner for new row logic to work exactly the same way as existing row logic. So the execution goes something like this:

- New row event on client generates a brand new GUID PK (some form of very unique ID seems fairly necessary to allow the client to do this autonomously from the DB).

- But otherwise the client logic just flows into the standard “GetEntityByID” proc call, passing the new GUID, none the wiser whether it’s new or existing… i.e. zero logic flow difference between a new record and an existing record, nirvana :).

- Of course this fresh GUID won’t get a row hit which conditionally falls into the new row logic where I return a “fake” row populated with whatever defaults I desire… take note, I’m not actually inserting a row into the table and then selecting that back out, I’m doing a select with “hard coded” values and no “from table” clause… that way I don't insert junk data nor forsake constraints, but the new row logic is kept in the proc layer – beautiful.

- Lastly, when committing to the DB, you fire the typical upsert proc which checks if it’s doing an insert or update by seeing if the PK exists and acting accordingly.
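Going back to the “union-like” perk above, here is a minimal in-memory sketch of merging several same-named rowsets into one cached table. `UnionMergeSketch`, `MergeAll` and `ClientRowset` are hypothetical names, not the actual TableCache code; the three rowsets stand in for what a chain of sub-procs might return, and the IsSpouse column is made up for the demo.

```csharp
using System;
using System.Collections.Generic;
using System.Data;

public static class UnionMergeSketch
{
    // Applies each incoming rowset in order; matching keys update, unknown keys insert.
    public static void MergeAll(DataSet cache, IEnumerable<DataTable> resultSets)
    {
        foreach (DataTable incoming in resultSets)
        {
            bool isNew = !cache.Tables.Contains(incoming.TableName);
            cache.Merge(incoming, false, isNew ? MissingSchemaAction.AddWithKey : MissingSchemaAction.Ignore);
        }
    }

    // Demo helper: a "Client" rowset carrying the key plus a single flag column.
    public static DataTable ClientRowset(string flagColumn, Guid key, bool flag)
    {
        var table = new DataTable("Client");
        table.Columns.Add("RowGUID", typeof(Guid));
        table.Columns.Add(flagColumn, typeof(bool));
        table.Rows.Add(key, flag);
        table.AcceptChanges();
        return table;
    }

    public static DataSet Demo(Guid jane, Guid newcomer)
    {
        var cache = new DataSet();
        DataTable clients = cache.Tables.Add("Client");
        clients.Columns.Add("RowGUID", typeof(Guid));
        clients.Columns.Add("IsSponsor", typeof(bool));
        clients.Columns.Add("IsSpouse", typeof(bool));
        clients.PrimaryKey = new[] { clients.Columns["RowGUID"] };
        clients.Rows.Add(jane, false, true);
        cache.AcceptChanges();

        MergeAll(cache, new[]
        {
            ClientRowset("IsSponsor", jane, true),    // e.g. returned by SubProc2
            ClientRowset("IsSpouse", jane, false),    // e.g. returned by SubProc3
            ClientRowset("IsSpouse", newcomer, true)  // key not in the cache, so it is inserted
        });
        return cache;
    }

    public static void Main()
    {
        Guid jane = Guid.NewGuid();
        DataSet cache = Demo(jane, Guid.NewGuid());
        DataRow row = cache.Tables["Client"].Rows.Find(jane);
        Console.WriteLine("Rows={0}, IsSponsor={1}, IsSpouse={2}",
            cache.Tables["Client"].Rows.Count, row["IsSponsor"], row["IsSpouse"]);
    }
}
```

Because each rowset is merged on its own, the procs never have to agree on a common column list; the only thing they all need to return is the primary key.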